Apple is set to expand the capabilities of its Vision Pro headset with advanced AI-driven features and a dedicated spatial content app as it seeks to redefine immersive computing, Reuters reported.

As Apple prepares for the global rollout of Vision Pro, the company is reportedly integrating on-device AI capabilities designed to enhance user interactions and boost productivity within the visionOS ecosystem. The AI-driven tools are expected to improve spatial computing experiences, particularly navigation, voice recognition, and real-time content generation.

Apple is also working on a spatial content app that will serve as a central hub for interactive AR/VR experiences, supporting both first-party and third-party content. The app fits Apple's long-term vision of a rich ecosystem in which developers can build and distribute immersive applications, including AI-powered virtual assistants, enhanced media experiences, and productivity tools.

Apple has been gradually rolling out AI-powered enhancements across its ecosystem, and Siri improvements, real-time object recognition, and predictive computing are likely to make their way into Vision Pro. These AI tools could significantly enhance the device's spatial awareness, helping it adapt dynamically to users' surroundings.

PM Modi: WAVES will empower Indian content creators go global

PM Modi: WAVES will empower Indian content creators go global  Meta rolls out ‘Teen Accounts’ feature to FB, Messenger

Meta rolls out ‘Teen Accounts’ feature to FB, Messenger  Govt. says pvt. sector TV channels can ride pubcaster’s WAVES

Govt. says pvt. sector TV channels can ride pubcaster’s WAVES  Sunny Deol says ready for fresh starts with streaming projects

Sunny Deol says ready for fresh starts with streaming projects  Govt. amends FM Radio e-auction norms

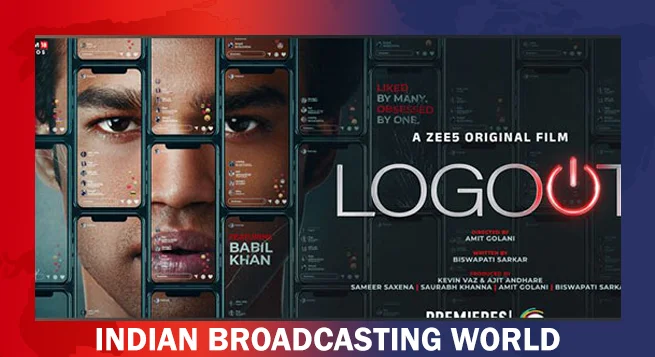

Govt. amends FM Radio e-auction norms  Babil Khan’s ‘Logout’ trailer unveiled

Babil Khan’s ‘Logout’ trailer unveiled  Nickelodeon India #1 kids’ channel for 11th year

Nickelodeon India #1 kids’ channel for 11th year  Pro Panja League S2 starts Aug 5 on Sony Sports

Pro Panja League S2 starts Aug 5 on Sony Sports