An Australian regulator has sent legal letters to social media platforms from YouTube, X and Facebook to Telegram and Reddit, demanding they hand over information about their efforts to stamp out terrorism content.

The e-Safety Commission said it was concerned the platforms were not doing enough to stop extremists from using live-streaming features, algorithms and recommendation systems to recruit users, Reuters reported from Sydney yesterday.

Since 2022, the regulator has had the power to demand big tech firms give information about the prevalence of illegal content and their measures to prevent that content from spreading. Failure to do so can result in hefty fines.

Commissioner Julie Inman Grant said Telegram was the platform most used by violent extremist groups to radicalise and recruit.

The Dubai-based messaging service, which a 2022 OECD report ranked at No. 1 for frequency of terrorism content, did not immediately respond to a Reuters request for comment.

“We don’t know if they actually have the people and resources in place to even be able to respond to these notices, but we will see,” Commissioner Julie Inman Grant told Reuters in an interview.

“We’re not going to be afraid to take it as far as we need to, to get the answers we need or to find them out of compliance and fine them,” she added.

She also said that second-ranked YouTube “has the power through their clever algorithms to spread propaganda broadly … in a very overt way or a very subtle way that sends people down rabbit holes.”

Subjects considered terrorism ranged from responses to the wars in Ukraine and Gaza to violent conspiracy theories to “misogynistic tropes that spill over into real-world violence against women”, she added.

The regulator has previously sent legal letters to platforms seeking information about the handling of child abuse material and hate speech, but its anti-terrorism blitz has been the most complex because of the wide range of content and methods of amplifying content, Inman Grant said.

Elon Musk’s X was handed the first e-Safety fine in 2023 over its response to questions about its handling of child abuse content. It is challenging the $386,000 fine in court.

A spokesperson for Facebook owner Meta said the company was reviewing the commission’s notices and that “there is no place on our platforms for terrorism, extremism, or any promotion of violence or hate”.

Delhi HC orders meta to remove deepfake videos of Rajat Sharma

Delhi HC orders meta to remove deepfake videos of Rajat Sharma  Govt. blocked 18 OTT platforms for obscene content in 2024

Govt. blocked 18 OTT platforms for obscene content in 2024  Broadcasting industry resists inclusion under Telecom Act

Broadcasting industry resists inclusion under Telecom Act  DTH viewing going down & a hybrid ecosystem evolving: Dish TV CEO

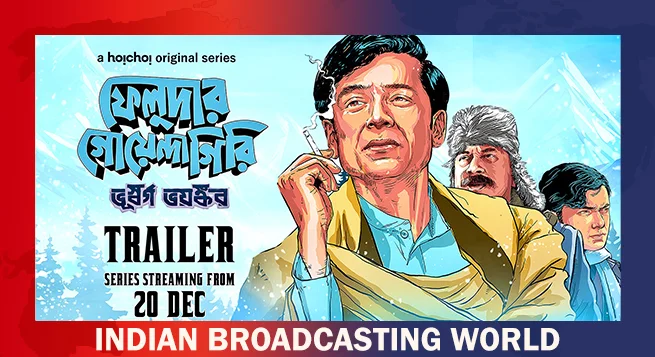

DTH viewing going down & a hybrid ecosystem evolving: Dish TV CEO  New adventure of detective Feluda debuts on Hoichoi Dec. 20

New adventure of detective Feluda debuts on Hoichoi Dec. 20  ‘Pushpa 2’ breaks records as most watched film of 2024: BookMyShow Report

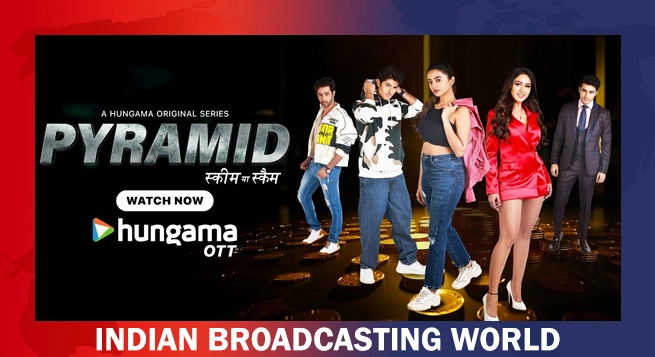

‘Pushpa 2’ breaks records as most watched film of 2024: BookMyShow Report  Hungama OTT unveils ‘Pyramid’

Hungama OTT unveils ‘Pyramid’  Amazon MX Player to premiere ‘Party Till I Die’ on Dec 24

Amazon MX Player to premiere ‘Party Till I Die’ on Dec 24  aha Tamil launches ‘aha Find’ initiative with ‘Bioscope’

aha Tamil launches ‘aha Find’ initiative with ‘Bioscope’  Netflix India to stream WWE content starting April 2025

Netflix India to stream WWE content starting April 2025