Even as Australian pressure on tech companies and social media platforms to take responsibility for defamatory content they host has galvanized the companies into action, a senior Facebook executive in Washington said on Sunday that the company would start nudging teenagers away from harmful content.

A tech body backed by the Australian units of Facebook, Google and Twitter said on Monday it has set up a special committee to adjudicate complaints over misinformation, a day after the government threatened tougher laws over false and defamatory online posts, Reuters reported from Sydney.

The issue of damaging social media posts has emerged as a second battlefront between Big Tech and Australia, which earlier this year passed a law to make platforms pay licence fees for content, sparking a temporary Facebook blackout in February.

Prime Minister Scott Morrison last week labeled social media “a coward’s palace”, while the government said on Sunday it was looking at measures to make social media companies more responsible, including forcing legal liability onto the platforms for the content published on them.

The Digital Industry Group Inc. (DIGI), which represents the Australian units of Facebook Inc., Alphabet’s Google and Twitter Inc., said its new misinformation oversight sub-committee showed the industry was willing to self-regulate against damaging posts.

The tech giants had already agreed a code of conduct against misinformation, “and we wanted to further strengthen it with independent oversight from experts, and public accountability”, DIGI Managing Director Sunita Bose said in a statement.

A three-person “independent complaints sub-committee” would seek to resolve complaints, lodged through a public website, about possible breaches of the code of conduct, DIGI said, but would not take complaints about individual posts.

The industry’s code of conduct includes items such as taking action against misinformation affecting public health, which would include the novel coronavirus.

DIGI, which also counts Apple Inc. and TikTok as signatories, said that if a company was found to have violated the code of conduct, it could issue a public statement or revoke the company’s signatory status with the group.

Australian Communications Minister Paul Fletcher, who has been among the senior lawmakers promising tougher action against platforms that host misleading and defamatory content, welcomed the measure, while consumer groups argued that it did not go far enough.

“I’m pleased that DIGI is announcing an important development to strengthen the way the code will protect Australians from misinformation and disinformation,” Fletcher said in a statement.

But Reset Australia, an advocacy group focused on the influence of technology on democracy, said the oversight panel was “laughable” because it involved no penalties and the code of conduct was optional.

“DIGI’s code is not much more than a PR stunt given the negative PR surrounding Facebook in recent weeks,” said Dhakshayini Sooriyakumaran, Reset Australia’s director of tech policy, in a statement urging regulation of the industry.

FB To Nudge Teens Away From Harmful Content

Meanwhile, a Facebook Inc. executive said on Sunday that the company would introduce new measures on its apps to steer teens away from harmful content, as U.S. lawmakers scrutinize how Facebook and subsidiaries such as Instagram affect young people’s mental health, according to a Reuters dispatch from Washington.

Nick Clegg, Facebook’s vice president of global affairs, also expressed openness to letting regulators access the algorithms Facebook uses to amplify content. But Clegg said he could not answer whether those algorithms had amplified the voices of the people who attacked the U.S. Capitol on January 6.

The algorithms “should be held to account, if necessary, by regulation so that people can match what our systems say they’re supposed to do from what actually happens,” Clegg told CNN’s ‘State of the Union’.

He spoke days after former Facebook employee and whistleblower Frances Haugen testified on Capitol Hill about how the company entices users to keep scrolling, harming teens’ well-being.

“We’re going to introduce something which I think will make a considerable difference, which is where our systems see that the teenager is looking at the same content over and over again and it’s content which may not be conducive to their well-being, we will nudge them to look at other content,” Clegg told CNN.

In addition, “we’re introducing something called, ‘take a break,’ where we will be prompting teens to just simply just take a break from using Instagram,” Clegg said.

U.S. senators last week grilled Facebook on its plans to better protect young users on its apps, drawing on leaked internal research that showed the social media giant was aware of how its Instagram app damaged the mental health of youth.

Senator Amy Klobuchar, a Democrat who chairs the Senate Judiciary Committee’s antitrust subcommittee, has argued for more regulation against technology companies like Facebook.

Clegg noted that Facebook had recently put on hold its plans for developing Instagram Kids, aimed at pre-teens, and was introducing new optional controls for adults to supervise teens.

Delhi HC orders meta to remove deepfake videos of Rajat Sharma

Delhi HC orders meta to remove deepfake videos of Rajat Sharma  Govt. blocked 18 OTT platforms for obscene content in 2024

Govt. blocked 18 OTT platforms for obscene content in 2024  Broadcasting industry resists inclusion under Telecom Act

Broadcasting industry resists inclusion under Telecom Act  DTH viewing going down & a hybrid ecosystem evolving: Dish TV CEO

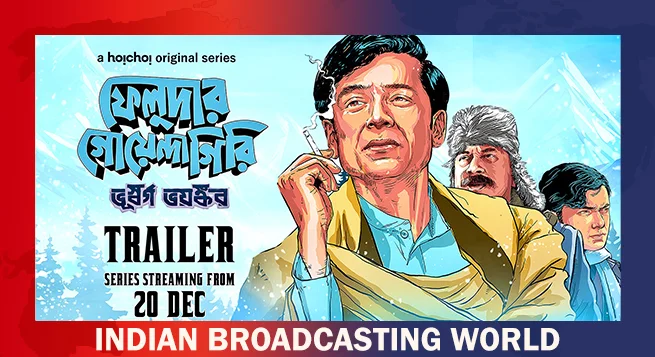

DTH viewing going down & a hybrid ecosystem evolving: Dish TV CEO  New adventure of detective Feluda debuts on Hoichoi Dec. 20

New adventure of detective Feluda debuts on Hoichoi Dec. 20  ‘Pushpa 2’ breaks records as most watched film of 2024: BookMyShow Report

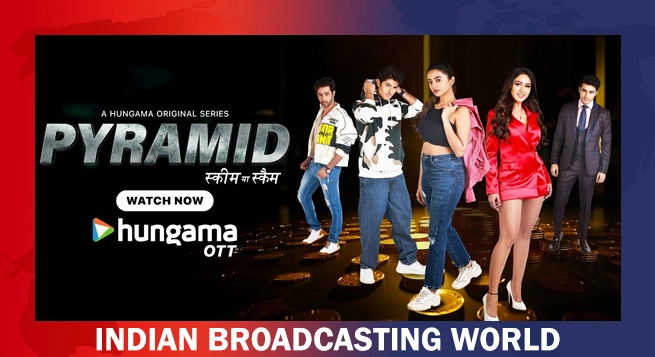

‘Pushpa 2’ breaks records as most watched film of 2024: BookMyShow Report  Hungama OTT unveils ‘Pyramid’

Hungama OTT unveils ‘Pyramid’  Amazon MX Player to premiere ‘Party Till I Die’ on Dec 24

Amazon MX Player to premiere ‘Party Till I Die’ on Dec 24  aha Tamil launches ‘aha Find’ initiative with ‘Bioscope’

aha Tamil launches ‘aha Find’ initiative with ‘Bioscope’  Netflix India to stream WWE content starting April 2025

Netflix India to stream WWE content starting April 2025