Meta has announced two new generative AI tools, Emu Video and Emu Edit, for high-quality video generation and image editing.

At this year’s Meta Connect, the company announced several new developments, including Emu, its first foundational model for image generation, IANS reported from San Francisco.

“With Emu Video, which leverages our Emu model, we present a simple method for text-to-video generation based on diffusion models. This is a unified architecture for video generation tasks that can respond to a variety of inputs: text only, image only, and both text and image,” Meta explained in a blog post late on Thursday.

The team splits the process into two steps: first, generating images conditioned on a text prompt, and then generating video conditioned on both the text and the generated image.

“This split approach to video generation lets us train video generation models efficiently,” said the company.

Emu Edit is a novel approach that aims to streamline various image manipulation tasks and bring enhanced capabilities and precision to image editing.

Emu Edit is capable of free-form editing through instructions, covering tasks such as local and global editing, adding and removing backgrounds, color and geometry transformations, detection and segmentation, and more.

“Unlike many generative AI models today, Emu Edit precisely follows instructions, ensuring that pixels in the input image unrelated to the instructions remain untouched,” said Meta.

While certainly no replacement for professional artists and animators, Emu Video, Emu Edit, and new technologies like them could help people express themselves in new ways.

WAVES’ Bharat Pavillion to showcase Indian media’s evolution,culture

WAVES’ Bharat Pavillion to showcase Indian media’s evolution,culture  Telecom subs base up marginally; Trai withholds updated b’band data

Telecom subs base up marginally; Trai withholds updated b’band data  WAVES driven by industry; govt just a catalyst: Vaishnaw

WAVES driven by industry; govt just a catalyst: Vaishnaw  In officials’ reshuffle, Shankar moves out of MIB; Prabhat comes in

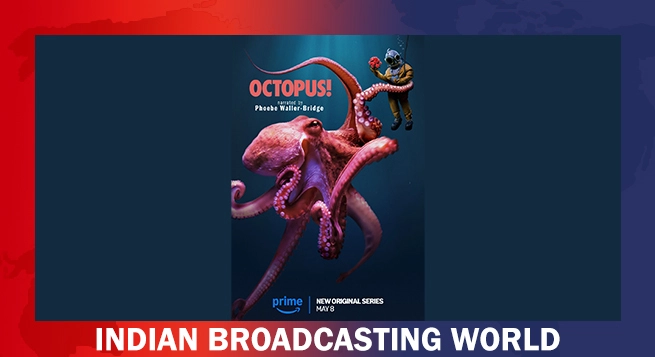

In officials’ reshuffle, Shankar moves out of MIB; Prabhat comes in  Phoebe Waller-Bridge dives deep in Prime Video’s new docuseries ‘Octopus!’

Phoebe Waller-Bridge dives deep in Prime Video’s new docuseries ‘Octopus!’  WION launches ‘Prime Crime’, a gripping new global investigative series

WION launches ‘Prime Crime’, a gripping new global investigative series  Park Bo-gum’s ‘Good Boy’ gears up for its grand premiere on Prime Video

Park Bo-gum’s ‘Good Boy’ gears up for its grand premiere on Prime Video  Rajkummar Rao joins Sun King as brand ambassador

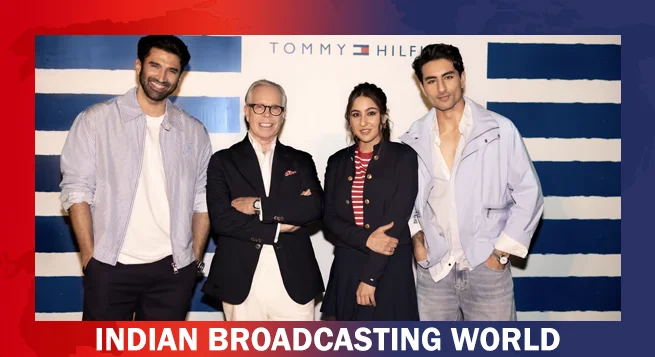

Rajkummar Rao joins Sun King as brand ambassador  Tommy Hilfiger shines in Mumbai with high-fashion store

Tommy Hilfiger shines in Mumbai with high-fashion store