Meta Platforms Inc’s independent oversight board said on Thursday that starting this month it can decide on applying warning screens, marking content as “disturbing” or “sensitive”.

The board, which already has the ability to review user appeals to remove content, said it would be able to make binding decisions to apply a warning screen when “leaving up or restoring qualifying content”, including photos and videos.

Separately, in its quarterly transparency report, the board said it received 347,000 appeals from Facebook and Instagram users around the world during the second quarter ended June 30, according to a Reuters report.

“Since we started accepting appeals two years ago, we have received nearly two million appeals from users around the world,” the board report said.

“This demonstrates the ongoing demand from users to appeal Meta’s content moderation decisions to an independent body,” it said.

The oversight board, which includes academics, rights experts and lawyers, was created by the company to rule on a small slice of thorny content moderation appeals, but it can also advise on site policies.

Last month, the board objected to Facebook’s removal of a newspaper report about the Taliban that the company had considered positive, backing users’ freedom of expression and saying the tech company relied too heavily on automated moderation.

Draft data protection rules aims to balance rules & innovation: Minister

Draft data protection rules aims to balance rules & innovation: Minister  WWE, Netflix endeavour to make wrestling big hit globally

WWE, Netflix endeavour to make wrestling big hit globally  No timeline yet for satcom spectrum recommendations: TRAI chief

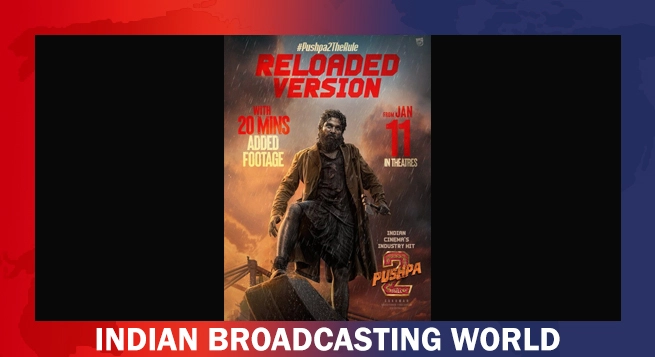

No timeline yet for satcom spectrum recommendations: TRAI chief  ‘Pushpa 2’ Reloaded: 20 extra minutes of footage added for extended theatrical run

‘Pushpa 2’ Reloaded: 20 extra minutes of footage added for extended theatrical run  Ankit Goyle joins Snap Inc. as head of India marketing

Ankit Goyle joins Snap Inc. as head of India marketing  Havas India appoints Manas Lahiri as chief growth officer

Havas India appoints Manas Lahiri as chief growth officer  ‘Ramayana: The Legend of Prince Rama’ set for Indian theatrical release Jan 24

‘Ramayana: The Legend of Prince Rama’ set for Indian theatrical release Jan 24  Discovery+ raises subscription prices in 2025

Discovery+ raises subscription prices in 2025