Meta Platforms’ Oversight Board is reviewing the company’s handling of two sexually explicit AI-generated images of female celebrities that circulated on its Facebook and Instagram services, the board said yesterday.

The board, which is funded by the social media giant but operates independently from it, will use the two examples to assess the overall effectiveness of Meta’s policies and enforcement practices around pornographic fakes created using artificial intelligence, it said in a blog post, according to a Reuters report from New York.

It provided descriptions of the images in question but did not name the famous women depicted in them in order to “prevent further harm,” a board spokesperson said.

Advances in AI technology have made fabricated images, audio clips and videos virtually indistinguishable from authentic content, resulting in a spate of sexual fakes online, mostly depicting women and girls.

In an especially high-profile case earlier this year, Elon Musk-owned social media platform X briefly blocked users from searching for all images of U.S. pop star Taylor Swift after struggling to control the spread of fake explicit images of her.

Some industry executives have called for legislation to criminalize the creation of harmful “deep fakes” and require that tech companies prevent such uses of their products.

According to the Oversight Board’s descriptions of its cases, one involves an AI-generated image of a nude woman resembling a public figure from India, posted by an account on Instagram that only shares AI-generated images of Indian women.

In a separate post, Meta acknowledged the cases and pledged to implement the board’s decisions.

JioStar secures 20 top brands for TATA IPL 2025

JioStar secures 20 top brands for TATA IPL 2025  After Airtel, Reliance announces pact with Starlink

After Airtel, Reliance announces pact with Starlink  In a surprise move, Airtel joins hands with Musk’s Starlink for India

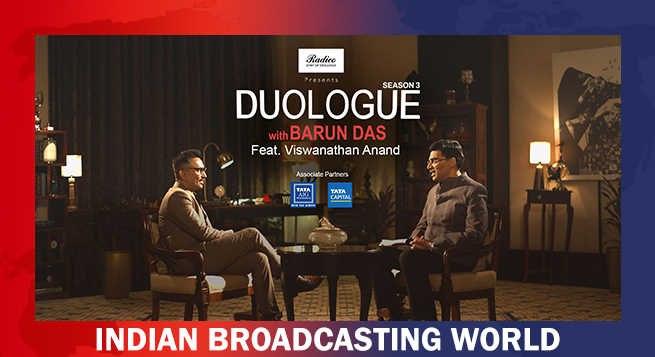

In a surprise move, Airtel joins hands with Musk’s Starlink for India  Viswanathan Anand on chess, AI, India’s rise in ‘Duologue with Barun Das’

Viswanathan Anand on chess, AI, India’s rise in ‘Duologue with Barun Das’  ‘Ponman’ to stream on JioHotstar from March 14

‘Ponman’ to stream on JioHotstar from March 14  ‘Shin Chan’ set for Indian theatrical debut

‘Shin Chan’ set for Indian theatrical debut  Olivier Richters credits Alan Ritchson for his casting in ‘Reacher’ S3

Olivier Richters credits Alan Ritchson for his casting in ‘Reacher’ S3  JioHotstar shatters streaming record with CT final

JioHotstar shatters streaming record with CT final